The thinking of AI

In the 17th century, Leibniz hypothesized that there could be a universal scientific language in which reasoning processes would be computed with mathematical formulas. With the invention of computers and the spread of automation, artificial general intelligence (AGI) has once again drawn attention, and one question arises: what method can be used to realize AGI?

Research direction

Natural language processing: Frege pointed out that “words and phrases are meaningful only in a certain language environment.” Language environment is a very complex issue and has therefore been avoided by scholars, who focus their research directly on texts instead. As symbols that generate thoughts, texts are like the graphics shown on a computer screen. In fact, the graphics are signals from the host computer, and behind the signals lies the logic of various types of software. Similarly, humans do not simply speak with their mouths and see with their eyes; all dialogue and observation are accomplished by the brain, while the sensory organs are only signal outlets and receivers. There is a barrier between the screen (language) and the software (thinking). Some logic may be deduced from the colors on the screen, but it is nearly impossible to know what software and code are running behind them.

The NLP method is only applicable to translation. It is like a filter in Photoshop that converts an oil painting (English, in the NLP context) into a sketch (Chinese, in the NLP context): the software keeps the graphic content intact but does not understand it.

Machine learning: the “learning” refers to the learning of unknown logic, a descriptive term used in naming ML. ML is just a branch of statistics. It arises from the fact that events are too complicated for humans to understand or to derive clear logic for, so they are evaluated only by their outward representation. ML knows the probability that a+b equals 2 but does not know, and has never considered, what 1+1 equals. If ML algorithms have nothing to do with human thinking, how can ML interact with humans? Before any event produces its result there is a very complicated process, and that process can take a long time. ML excels at calculating the statistics of event results but is unable to realize AGI.

Is it possible for AI to have a learning ability? The difficulty of a human brain exploring the world to obtain knowledge is entirely different from the difficulty of humans creating a brain able to explore the world to obtain knowledge. The former is an accomplishment of God, while the latter would be an accomplishment of humans. The view that AI may have a learning ability, or that AI can surpass humans, is equivalent to saying that humans can surpass God. Code can analyze the logic written into it but is unable to understand and derive new logic. There is only one type of machine in the world that can derive countless logics: the human brain. The probability of AI having a learning ability is far lower than the odds in the infinite monkey theorem, because AI's possibility of understanding other logic is confined within a set of code, without the freedom allowed by natural selection theory…

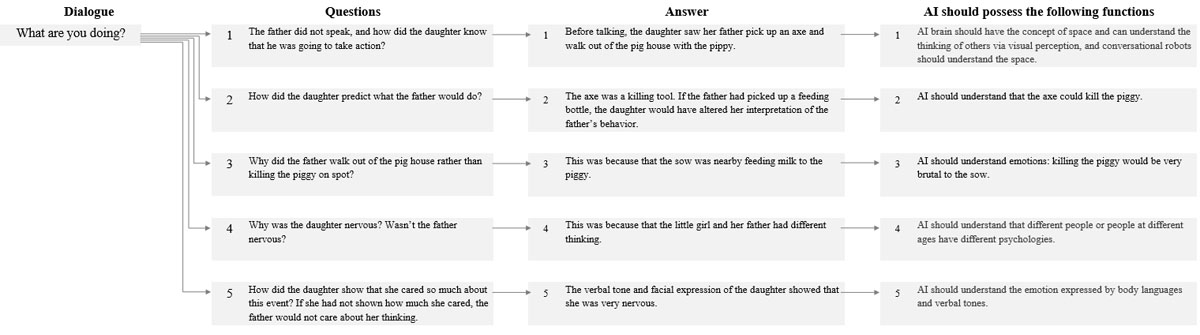

Let’s take a look at how complex the first line of the script of the movie Charlotte’s Web is for AI: the daughter says, “What are you doing?”

Vector illustration, view at: https://drive.google.com/file/d/1uJ-W-0NDEUU6qZV-PBX6XoEFSyvciIAx/view?usp=sharing


The questions above are only a few summarized here. If broken down, there would be tens of thousands of questions, and if any one of them were missing, the human-machine interaction could not proceed. What algorithm can solve questions expressed in just a couple of words? NLP and ML mislead the general public: the algorithms are very complicated while the few words in a conversation look simple, and this contrast between complexity and simplicity convinces the public of NLP and ML. The public has no idea that such a small number of words triggers such complicated logic in the brain, nor that AI only outputs pre-set, meaningless characters while the human brain endows those characters with meaning or even emotion.

To achieve AGI, human thinking must be studied, but brain science is still at a rudimentary stage, with unclear theories and obvious paradoxes, which is a very big issue. In this study, we propose using computers to construct a framework of human thinking, integrating HCI and brain logic into the framework one by one and verifying their accuracy. As this framework is gradually improved, the working principle of human thinking would be unveiled and AGI would be achieved.

Overview of the design

A human brain contains about 100 billion neurons that form our consciousness, with each neuron having about 2,000 branches. A person's memories, logic, and values are all processed by neurons. During learning, the brain stores knowledge in neurons as memories. Each time a new viewpoint arises, the neuron branches establish a new sequence to form a new logic. Therefore, neurons are responsible for memories, the connections among neuron branches for logic, and the activity of neurons for logical operations.

Calculation: sets (brain memories) are used as the roots, and functions (brain logic) are used to calculate sets. In the following figure, the dark dots a and b represent two sets (neurons), while the blue lines represent subsets (neuron branches). When a subset of a encounters a subset of b, they generate variables for each other, allowing sets a and b to generate function values that form a new set. Variables are applied indefinitely among the subsets, with time as the scale of the variables.

Any formula is composed of calculation elements and logic (the expression). For example, in the formula 0+1=1, the five elements 0, +, 1, =, 1 correspond to an AI set in this framework, and the logic of the expression 0+1=1 is analogous to an AI function. If the symbol {0} represents a set referred to as “I” (a “being”), {1} represents a set referred to as the world, and {+} represents the set of relations between “I” and the world, then the function value of “I” is {0}{+}{1}={1}, meaning the change in “I” after “I” interacts with the world. In this study, {0} and {0}{+}{1}={1} are used to construct all the behaviors and consciousness of AI, while language is used to narrate this group of formulas. In the following part, we will divide {0} into infinitely small segments; how far the division goes depends on our intellectual demands on AI.
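To make the notation concrete, here is a minimal Python sketch of {0}{+}{1}={1}, assuming that a set can be modeled as a named dictionary of properties and a relation {+} as a function that takes the current “I” and the world and returns a changed “I”. The names `EntitySet`, `interact`, and `eat` and the hunger/food properties are hypothetical illustrations, not part of the framework.

```python
from dataclasses import dataclass, field


@dataclass
class EntitySet:
    """A named set with properties; a hypothetical stand-in for {0} or {1}."""
    name: str
    state: dict = field(default_factory=dict)


def interact(me: EntitySet, relation, world: EntitySet) -> EntitySet:
    """{0}{+}{1}={1}: apply a relation between "I" and the world and
    return the changed "I" as a new set (the function value)."""
    new_state = relation(dict(me.state), world.state)  # copy, so the old "I" is kept
    return EntitySet(me.name, new_state)


# Hypothetical relation {+}: "I" eats food that exists in the world.
def eat(me_state: dict, world_state: dict) -> dict:
    if world_state.get("food", 0) > 0:
        me_state["hunger"] = max(0, me_state.get("hunger", 0) - 1)
    return me_state


i0 = EntitySet("I", {"hunger": 2})
world = EntitySet("world", {"food": 3})
i1 = interact(i0, eat, world)
print(i1.state)  # {'hunger': 1}
```

Returning a new set instead of mutating `i0` keeps the earlier “I” available, which fits the idea that each function value forms a new set.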

2. Spatial sets: the entity sets in the above figure are shown in a plane because of the limitations of Excel; in practice, entity sets should be situated in a 3D space like the one depicted by Google Earth.

Note: the symbolic expression {0}{+}{1}={1} will be replaced by other symbols in what follows.

Memories (sets)

There are three types of sets: entity sets, space sets, and time sets.

1. Entity sets

AI consciousness, represented by the red blocks in the following figure, has the same definition as human consciousness, namely “I think, therefore I am,” which implies that the entire objective world, including the body and logic, is contained in AI consciousness. Sensory organs are information connectors between the objective world and the {world}. If a human brain did not use sensory organs to perceive the body, consciousness would not know whether the body exists. This is illustrated by the phantom limb phenomenon in amputees: a soldier whose foot is to be amputated, if not informed of the amputation before surgery, would still feel the amputated foot on waking after the surgery, which suggests that amputating a foot is not equivalent to deleting the neurons that controlled it!

Note: the structured sets depicted with dashed lines in the following figure do not exist and are shown only to aid reading.

As a person, {Theodore}, depicted by the yellow blocks in the following figure, has nearly the same sets as AI; moreover, {Theodore} is a subset of Samantha (AI)(consciousness)! Because interactive computation is involved, if Samantha's consciousness does not understand Theodore, the computation cannot be carried out. Whether the subsets of {Theodore} exist in Samantha's consciousness depends on two factors: (1) the volume of Theodore’s objective knowledge and (2) the degree of Samantha’s understanding of Theodore. The yellow dashed box indicates that all users known to Samantha are recorded here. It is preliminarily estimated that more than 1 million entity sets would meet the requirements of AGI.

Note: the classification of the subordination relations between human and AI sets is, to some extent, not widely accepted. The authors think that a person's skin, muscles, bones, and everything else are all attached to the nervous system.

Sets are denoted by braces { } and the numbers inside them. For example, {01-07-12} represents a leg set, where each later number denotes a subset of the number before it; in this case, if the first number 01 represents a person and the second number 07 represents a leg, the third number 12 cannot denote any part outside the leg.
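The numbering rule can be checked mechanically: a set is a subset of another exactly when the other's number path is a proper prefix of its own. The sketch below assumes labels are written as in the example, with codes separated by hyphens; the helper names and the meaning “knee” for code 12 are hypothetical.

```python
def parse_set_id(label: str) -> tuple[str, ...]:
    """Turn a label such as "{01-07-12}" into its path ("01", "07", "12")."""
    return tuple(label.strip("{} ").split("-"))


def is_subset(child: str, parent: str) -> bool:
    """True if `child` lies inside `parent`, i.e. the parent's path is a
    proper prefix of the child's path."""
    c, p = parse_set_id(child), parse_set_id(parent)
    return len(c) > len(p) and c[: len(p)] == p


def describe(label: str, names: dict[tuple[str, ...], str]) -> str:
    """Spell out a label level by level using a registry of set names."""
    path = parse_set_id(label)
    return " > ".join(names[path[:i]] for i in range(1, len(path) + 1))


# Hypothetical registry; "knee" for code 12 is an illustrative assumption.
NAMES = {("01",): "person", ("01", "07"): "leg", ("01", "07", "12"): "knee"}

print(describe("{01-07-12}", NAMES))       # person > leg > knee
print(is_subset("{01-07-12}", "{01-07}"))  # True
print(is_subset("{01-08-12}", "{01-07}"))  # False
```

Because the path encodes the whole chain of parents, code 12 can only ever name something inside the leg, which is exactly the constraint stated above.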

3. Time sets: because of the limitations of Excel, time is not specified in the sets the way space is. The role of time is to memorize and calculate change, as shown in the following figure. Memories are like movies, in which countless frames are played in sequence to form moving images. After being played, the frames become memories, while the unplayed frames represent calculation that predicts when a result will occur and what it will be. Time is a metric of change. As shown in the red boxes in the above figure, {time} is juxtaposed with {world} because entity and space sets really exist, while time does not; it is only a product of human consciousness, a symbol the human brain uses to measure change in the world.

Each entity set contains four attributes: time, space, function, and supplementary information. Strictly speaking, the statement “the set has the following information” is invalid for any set, because such information would conflict with the set's subsets. The information attribute is attached to each set only because of the astonishingly large volume of data: even if AI were developed to the level predicted in science fiction movies, that volume of data still could not be handled by AI hardware or AI designers. In brief, the elements in the set framework are used to collect all the elements of thinking and are not elaborated here. The logic (functions) is explained as follows.
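A minimal sketch of one entity set holding the four attributes, using Python dataclasses. The field names, the 3D coordinate for space, and representing the function as a callable are illustrative assumptions rather than the authors' design.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional


@dataclass
class EntitySet:
    """One entity set with the four attributes named in the text.
    Field names and types are illustrative assumptions."""
    set_id: str                                          # e.g. "{01-07}"
    time: Optional[float] = None                         # last moment the set changed
    space: tuple[float, float, float] = (0.0, 0.0, 0.0)  # position in 3D space
    function: Optional[Callable[[dict], dict]] = None    # the set's own logic
    info: dict = field(default_factory=dict)             # supplementary information
    subsets: list["EntitySet"] = field(default_factory=list)

    def add(self, child: "EntitySet") -> "EntitySet":
        """Attach a subset and return it, so children can be built inline."""
        self.subsets.append(child)
        return child


person = EntitySet("{01}", info={"role": "user"})
leg = person.add(EntitySet("{01-07}", space=(0.0, 0.0, 0.5)))
print([s.set_id for s in person.subsets])  # ['{01-07}']
```

The free-form `info` dictionary plays the role of the supplementary-information attribute: a pragmatic place for data too voluminous to model as proper subsets.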

Logics (functions)

Function formulas are the logic of AI's thinking. Each person or object in the world has a logic specific to it. There are four types of function formulas, shown by the blue blocks in the set framework: AI, material, moving creatures, and plants. All these function formulas belong to the consciousness subset of AI. Objective consciousness and behaviors must be manifested in AI's consciousness; otherwise the objective world cannot be understood, and interaction becomes impossible. AI and machines differ most in their capacity for interaction. A machine represents absolute control by the ego, with each component controlling the components of its “subset,” and so forth. In contrast, interaction represents the ego facing the world. As shown in the following figure, Samantha is with Theodore, Amy, Paul, the weather, a chair..., and their interaction is like a turn-based game in which each person or object is undergoing change, and the change should be written into Samantha's set, as denoted by the red lines in the following figure. In the turn-based game, the independent variables of the next turn are the current function values, and the length of a turn depends on the person or object.
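The turn-based interaction can be sketched as a loop in which each turn's function values become the next turn's independent variables. The participants, their turn lengths (in abstract ticks), and their update rules below are hypothetical; `samantha` stands for the AI's record of the world, into which every change is written.

```python
from typing import Callable

# Hypothetical participant: (turn length in ticks, update function).
Participant = tuple[int, Callable[[float], float]]


def run_turns(initial: dict[str, float],
              participants: dict[str, Participant],
              ticks: int) -> list[dict[str, float]]:
    """Each participant acts only on ticks that are multiples of its own
    turn length; its new value (function value) is the input of its next
    turn. Every change is written into `samantha`."""
    samantha = dict(initial)
    history = [dict(samantha)]
    for t in range(1, ticks + 1):
        for name, (turn_len, update) in participants.items():
            if t % turn_len == 0:
                samantha[name] = update(samantha[name])
        history.append(dict(samantha))
    return history


participants = {
    "Theodore": (1, lambda mood: mood + 1),   # changes every tick
    "weather": (3, lambda temp: temp - 2.0),  # changes every third tick
}
history = run_turns({"Theodore": 0, "weather": 30.0}, participants, ticks=6)
print(history[-1])  # {'Theodore': 6, 'weather': 26.0}
```

Giving each participant its own turn length reflects the statement that turn time depends on the person or object.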

The function values of each set are obtained from other sets. For example, as shown by the red dashed lines in the following figure, {meal time} is affected by the sets {temperament}, {sleep}, {depression}, {motion}…, and these sets are in turn affected by other sets.
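Because each set's value is computed from other sets, the sets form a dependency graph that can be evaluated in topological order. This sketch uses the standard library's `graphlib.TopologicalSorter` (Python 3.9+) and assumes the graph has no cycles. The edges follow the {meal time} example, plus an assumed edge from {sleep} to {depression}; every numeric function is an invented placeholder.

```python
from graphlib import TopologicalSorter

# Each set lists the sets its value depends on (its predecessors).
DEPENDS_ON = {
    "meal_time": {"temperament", "sleep", "depression", "motion"},
    "depression": {"sleep"},  # hypothetical edge: "affected by other sets"
    "temperament": set(),
    "sleep": set(),
    "motion": set(),
}

# Hypothetical functions; each reads the already-computed values it needs.
FUNCTIONS = {
    "temperament": lambda v: 0.5,
    "sleep": lambda v: 6.0,    # hours slept
    "motion": lambda v: 1.0,   # hours of exercise
    "depression": lambda v: max(0.0, (8.0 - v["sleep"]) / 8.0),
    "meal_time": lambda v: 12.0 + 2 * v["depression"]
                           - 0.5 * v["motion"] + v["temperament"],
}


def evaluate(depends_on: dict, functions: dict) -> dict:
    """Compute every set after all the sets it depends on."""
    values = {}
    for name in TopologicalSorter(depends_on).static_order():
        values[name] = functions[name](values)
    return values


values = evaluate(DEPENDS_ON, FUNCTIONS)
print(values["meal_time"])  # 12.5
```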

The following structure diagram shows only a small fraction of human function sets: humans and food. Like sets, function formulas can be broken down and have subordination relations. The breakdown of function formulas shows that they are composed of massive numbers of function sets arranged in a hierarchy. The dashed lines carry no meaning and are used only for readability. The gray lines represent the sets that the functions may trigger, while the blue lines represent the sets actually triggered. Of so many gray-line sets, which will be triggered? What rules and connections exist among the independent variables, operational signs, variables, and function values? This logic is illustrated by the effect of emotion on thinking, elaborated in a later part of this paper.

Note: the subsets of the function framework are no different from those of the set framework, but the two frameworks themselves are different.

The above figure looks like a series of computation formulas, but it actually illustrates only a computation framework! The framework is like a circuit board on which electrons can move only within the circuit. If the function framework were fully unfolded, it would be astonishing: if the connections among all the subsets were fully designed and included, the structure diagram above would be as big as a city rather than a few monitor screens. How does human thinking move through such a large framework? In the following part, the operation of human thinking is described in text.

Operation of thinking (language environment and association)

Although the phrase “language environment” contains the word “language,” a language environment is not simply a language; it refers to a thing or “task” being processed in human consciousness. If tasks are thought of as the various pieces of software installed on a computer, then a language environment is the specific piece of software currently running. Human thinking has two major characteristics: (1) it operates like a single-task operating system (internal language environment), and (2) it has association, which allows a switch from the current task (internal language environment) to another (external language environment). In the following figure, {1-x-x} denotes the set of a single task (language environment), {2-x-x} denotes the set of another single task, {3-x-x} yet another, and so forth. There are countless tasks in the brain, and only after the current task is completed does the brain handle the next one, and so forth, until the process is interrupted by, for example, association (marked with red lines) or a third party.

Language environment: a single-task operating system

Unlike a computer, the human brain runs only one task when thinking; in other words, the human brain is a single-task operating system and cannot run multiple tasks simultaneously. For example, making a phone call while driving can lead to a car accident, because driving is a set of driving skills and making a phone call is a set of conversational content; the two sets have different logics, which causes confusion when they run together. Someone may argue that he or she often makes phone calls while still driving safely; this is only because the logical thinking required for driving has already been completed, so thinking can switch quickly between driving and the other task (the switching happens at a high frequency). For example, on a straight road with few vehicles, the brain has already figured out how to handle the situation and only needs to carry it out, which lets the driver drive and chat “simultaneously.” If someone suddenly tries to overtake, the driver has to start thinking about the set of driving skills again, and after the issue has been resolved, the driver asks the person on the phone: “What did you just say? Can you repeat it?...”

Another scenario: the person on the phone changes the topic and mentions something important, such as “you have been fired by the company” or “the kids are being bullied by their classmates,” after which the driver gets emotional and starts to argue, asking for the reason. In this scenario, the issue goes beyond a simple, relaxed chat, and the brain has to think through and analyze many things to answer “Why did this happen?”, which at some point makes the driver forget about driving…

Chatting has a “single-task” feature, too. Chatters do not want the language environment disrupted; otherwise they would stray off topic.

Tay needs to follow the language environment when chatting with humans. For example, if I say “please guess my favorite food” and Tay replies “meat,” and I then ask “what meat?”, Tay is supposed to continue completing this set. If a reply is triggered by lexical meaning rather than by thinking, the chat will stray off topic in the second round of dialogue.

Language arises from the communication of thoughts among people, but communicating thoughts has a very big problem: thoughts can be extremely complicated and involve a very large amount of detail, and if every detail were presented, a single sentence in a dialogue would take more than 10 hours rather than a few seconds. Therefore, words and sentences are, in a sense, a type of “command.” A similar scenario is humans “communicating” with computer numerical control (CNC) machinery: humans input simple commands, and the machinery automatically completes a series of operations. Likewise, when one person talks with another, each sentence in the dialogue triggers very complicated logic in the brain. Language is therefore, by nature, a group of concise symbols that analyze and organize thoughts, characterized by generality and abstractness so as to facilitate interaction and communication. This means that (1) language and text cannot fully express thoughts but only activate them, so if AI has no thoughts, analyzing text is totally meaningless; and (2) words have multidimensional meanings, such as a word with several meanings (polysemy) or a word used as a substitute word (e.g., a pronoun), an exclamation, an adjective, or an allegorical word for the sake of conciseness. As a result, no matter how thorough and perfect semantic analysis is, it would not be of much help to conversational robots, because there is no logic linking these words together.

How can a dialogue be conducted in such a language environment?

1. The logic of thinking

In the form of a set:

As shown by the red A in the big structure diagram, I ask Tay: “what meat?” The connotation of this sentence is: what are the subsets of meat, as a kind of food, in the AI framework?
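One way to read “what meat?” as a set query is to keep the current language environment as a path in the set framework and to resolve the word only inside it. The framework fragment and the class and method names below are hypothetical.

```python
# Hypothetical fragment of the set framework: food > meat > kinds of meat.
FRAMEWORK = {
    "food": {
        "meat": {"beef": {}, "pork": {}, "chicken": {}},
        "fruit": {"apple": {}, "pear": {}},
    },
}


class LanguageEnvironment:
    """Tracks the one set the dialogue is currently about."""

    def __init__(self, framework: dict):
        self.framework = framework
        self.path: list[str] = []  # e.g. ["food", "meat"]

    def focus(self, *path: str) -> None:
        self.path = list(path)

    def node(self) -> dict:
        n = self.framework
        for key in self.path:
            n = n[key]
        return n

    def what(self, word: str) -> list[str]:
        """Answer "what <word>?" by listing the subsets of <word>,
        looked up only inside the current language environment."""
        if self.path and self.path[-1] == word:  # the word names the current set
            return sorted(self.node())
        child = self.node().get(word)            # or one of its subsets
        return sorted(child) if child is not None else []


env = LanguageEnvironment(FRAMEWORK)
env.focus("food")          # "please guess my favorite food"
env.focus("food", "meat")  # Tay answers "meat"
print(env.what("meat"))    # ['beef', 'chicken', 'pork']
```

Restricting the lookup to the current environment is what keeps the second round of dialogue on topic; a word that matches nothing there returns an empty list rather than triggering an unrelated sentence.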

In the form of a function:

As shown in the following figure, language reflects thinking and manifests interaction among humans, while interaction represents a relation among individuals; therefore every language has a basic subject-predicate-object structure, which corresponds to the function framework in this study. All subject terms (I, independent variables), predicate terms (relations, operational signs), and object terms (world, variables) “serve” the subject-predicate-object structure.

Note: lexical classification based on conventional linguistic knowledge would lead to very poor logic, because linguistic knowledge is built on the human brain, and the human brain itself has already processed many logics.

In conversation between people, grammar is sometimes ignored entirely, yet no matter how the words are arranged, the true meaning of the dialogue is not misunderstood. For example, I ask “where you going?” and you reply “I am going to eat a meal.”, “Eat a meal, me.”, or “Eat a meal.”, and all of these replies are understandable to me. Why?

For the reply “Eat a meal…me,” as shown by the red B in the big structure diagram, the term “eat” indicates that the relation between humans and food has been established: a meal is eaten by humans, so even if the grammatical structure is reversed in writing, it does not lead to the logic that the meal eats humans.

For the reply “Eat a meal,” a question arises: who is going to eat? I know who will eat because when I first asked my question, I had already included you in the language environment, in which “you” are the independent variable, “eat” is the operational sign, and “meal” is the dependent variable; by asking “where you going?” I am, by nature, asking for the function expression of the independent variable.
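The three replies can be mapped onto the same function by assigning roles from the kinds of sets that “eat” relates rather than from word order, and by filling a missing subject from the language environment. The relation and lexicon tables are hypothetical toy data; a real framework would derive them from the set and function frameworks.

```python
from typing import Optional

# Hypothetical tables: which kinds of sets a verb relates, and each word's kind.
RELATIONS = {"eat": {"subject": "human", "object": "food"}}
KINDS = {"i": "human", "me": "human", "you": "human", "meal": "food"}


def to_function(reply: str, context_subject: Optional[str]) -> dict:
    """Map a reply onto (independent variable, operational sign, variable).
    Roles come from the kinds of sets the verb relates, not from word
    order; a missing subject is taken from the language environment.
    Assumes the reply contains a known verb (otherwise StopIteration)."""
    words = [w.lower().strip(",.?!…") for w in reply.split()]
    verb = next(w for w in words if w in RELATIONS)
    roles = RELATIONS[verb]
    subject = next((w for w in words if KINDS.get(w) == roles["subject"]), None)
    obj = next((w for w in words if KINDS.get(w) == roles["object"]), None)
    return {"subject": subject or context_subject, "sign": verb, "object": obj}


# "where you going?" has already put "you" into the language environment.
for reply in ["I am going to eat a meal.", "Eat a meal, me.", "Eat a meal."]:
    print(to_function(reply, context_subject="you"))
```

Because only a human-kind set can fill the subject slot of “eat”, reversing the word order never makes the meal the eater.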

In the form of a loop:

As shown by the bold blue lines in the big structure diagram, eating is a procedure conducted step by step, with each step being necessary, and it is also a loop. If the user has completed the set “obtaining the food,” Tay should understand that “choosing the food” is a past event: the previous sets can only be recalled, narrated, and summarized, the current set is being performed right now, and the future sets are still being planned.
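The looped procedure can be sketched by splitting the steps around the last completed one. The step names are hypothetical placeholders for the bold blue lines in the diagram; after the last step, the loop is assumed to restart at the first.

```python
# Hypothetical steps of the eating loop; the diagram's actual steps may differ.
EATING_LOOP = ["feel hungry", "choose the food", "obtain the food", "eat", "clean up"]


def place_in_loop(last_completed: str) -> dict:
    """Split the loop around the last completed step: past steps are only
    recalled and summarized, the current step is being performed, and the
    remaining steps are still being planned."""
    i = EATING_LOOP.index(last_completed)
    past = EATING_LOOP[: i + 1]
    rest = EATING_LOOP[i + 1:] or EATING_LOOP  # after the last step the loop restarts
    return {"past": past, "current": rest[0], "future": rest[1:]}


print(place_in_loop("obtain the food"))
```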

2. Grammatical and logical mistakes in language

As mentioned earlier, language is characterized by generality and abstractness, which inevitably leads to uncertainty in expression, especially when the speaker is emotional, so mistakes in logic and grammar occur frequently. However, there are no mistakes in the speaker's thinking, because he or she, as a human being, can recognize and correct them. How can AI achieve this?

Classification of sets: emotion causes logical mistakes in language. For example, the barber in Russell’s paradox said he would “shave all those people in the city who do not shave themselves.” In fact, the barber's thinking is not contradictory; out of arrogance and greed for money, the barber introduced a paradox into his statement. If AI understands the barber's emotion, it should understand that the barber's thinking had already divided the people of the city into two sets: the barber himself formed a vendor set, and the other people in the city formed a consumer set.

Relativity: suppose that in a hot summer I am chatting with a friend who says, “I am not afraid of the cold, and this damn summer is so bad,” and I reply, “I am not afraid of the heat.” What I said should be understood in comparison with the fear of cold. In fact, nobody is unafraid of heat; the logic that humans fear heat is absolutely valid, so what I expressed in the dialogue is actually relative to cold.

Language environment: suppose there is a couple of lovers, and the girl asks the boy, “If you love me, can you not look at any woman from now on?” In this scenario, the word “look” is a substitute word, and substitute words have far broader connotations than we might imagine, including not only words such as “she,” “that,” and “this” but many others. In fact, any word, sentence, or even article can allude to, describe, or serve as a metaphor for something. How can AI make a proper judgment? All the logics of the word “look” can be listed and placed in the language environment to make the word’s connotation clear.
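Listing every logic of a word and checking it against the current language environment can be sketched as below. The senses of “look” and their environment tags are hypothetical, and scoring by tag overlap is an assumed, deliberately simple stand-in for the framework's reasoning.

```python
# Hypothetical senses of "look", each tagged with the environments it fits.
LOOK_SENSES = {
    "glance at with the eyes": {"street", "driving", "reading"},
    "show romantic interest in": {"relationship", "lovers", "jealousy"},
    "search for": {"lost item", "shopping"},
}


def disambiguate(senses: dict[str, set[str]], environment: set[str]) -> str:
    """List every logic of the word and keep the one that shares the most
    tags with the current language environment (first one wins on ties)."""
    return max(senses, key=lambda s: len(senses[s] & environment))


print(disambiguate(LOOK_SENSES, {"lovers", "relationship"}))  # show romantic interest in
```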

3. Effects of emotion on thinking

Depression is referred to as the “common cold” of psychological disorders. Although most people do not have depression, depressed mood is common. Therefore, it is very important for AI to understand depressed mood in humans; without such understanding, AI would have problems interacting with humans. In a depressed mood, a user sometimes shows anorexia or gluttony. How can AI infer the user's inner state from this abnormal diet and handle these scenarios? This logic is briefly introduced here, as depicted by the yellow dashed lines in the big structure diagram.
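As a rough illustration of detecting a diet abnormality, the sketch compares today's intake with the user's own history and flags both unusually low and unusually high intake, matching anorexia and gluttony above. The thresholds `k` and `floor` and the intake unit (e.g. portions per day) are assumptions; the output is only a cue that the AI should look into the user's mood.

```python
from statistics import mean, pstdev


def diet_signal(history: list[float], today: float,
                k: float = 2.0, floor: float = 0.1) -> str:
    """Compare today's intake with the user's own baseline. The tolerance
    band is k population standard deviations, but never narrower than
    `floor` times the mean, so a perfectly regular history still has a margin."""
    mu = mean(history)
    band = max(k * pstdev(history), floor * mu)
    if today < mu - band:
        return "eating much less than usual"  # possible anorexia with low mood
    if today > mu + band:
        return "eating much more than usual"  # possible gluttony with low mood
    return "normal"


print(diet_signal([2.0, 2.0, 2.0, 2.0], 0.5))  # eating much less than usual
```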

Association

Association can be triggered in many forms: by an image (Theodore thinks of his lover when seeing the moon), by an object (Theodore goes shopping and, seeing umbrellas on the rack, remembers getting wet and cold yesterday, so he buys an umbrella), or by text such as “lover” and “ideal,” especially words with multiple meanings. In short, any text may trigger association.

How association switches language environments:

The switch condition: in most cases the brain does not proactively switch language environments; a switch happens only when another language environment is more important and appealing than the current one.

How to achieve a switch: an early-warning system is built into AI's thinking to compile the important events that have occurred in the past or are likely to occur in the future. When a subset of the current language environment triggers the early-warning system, AI analyzes the importance of the two events and decides whether it needs to switch to a different language environment; alternatively, when the user switches the language environment, AI immediately knows why, namely that the dialogue should now be conducted in another language environment. The early-warning system is a module that AI runs continuously at every point in time. Although the human brain is a single-task operating system, the association feature of the brain indicates that an “early-warning system” exists in consciousness, which also implies that the human brain is not a single-task operating system after all.
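The switch condition and the early-warning module can be sketched together: a watch list of environments, each with an importance score and trigger words, is checked on every utterance, and the current environment is replaced only by a triggered one that is more important. The scores, trigger words, and class names are hypothetical; the example reuses the phone-call scenario from the driving example.

```python
from dataclasses import dataclass


@dataclass
class Environment:
    name: str
    importance: float        # hypothetical score in [0, 1]
    triggers: frozenset      # words (subsets) that can bring it to mind


class EarlyWarning:
    """Runs on every utterance; switches only when a triggered environment
    is more important than the current one."""

    def __init__(self, current: Environment, watched: list):
        self.current = current
        self.watched = watched

    def observe(self, words: set) -> Environment:
        triggered = [e for e in self.watched if e.triggers & words]
        best = max(triggered, key=lambda e: e.importance, default=None)
        if best is not None and best.importance > self.current.importance:
            self.watched.remove(best)
            self.watched.append(self.current)  # the old task can be resumed later
            self.current = best
        return self.current


chat = Environment("casual chat", 0.2, frozenset({"weather", "movie"}))
job = Environment("job loss", 0.9, frozenset({"fired", "company"}))
rain = Environment("yesterday's rain", 0.1, frozenset({"umbrella"}))

ew = EarlyWarning(chat, [job, rain])
print(ew.observe({"nice", "umbrella"}).name)    # casual chat
print(ew.observe({"you", "are", "fired"}).name)  # job loss
```

A trigger alone is not enough to switch: the umbrella memory is triggered but is less important than the chat, which mirrors the switch condition stated above.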

This work is licensed under a Creative Commons Attribution-ShareAlike 4.0 International License.
